Debunking McKinsey's Software Engineering Productivity Metrics: A Technical Perspective

TL;DR

McKinsey's software engineering productivity metrics are overly simplistic and misaligned with DevOps principles. They focus too much on individual output, neglecting the complexities and collaborative nature of software engineering. Metrics like DORA's are more effective as they emphasize team outcomes and align with DevOps practices. Adopting McKinsey's approach could lead to reduced collaboration and a focus on gaming the system rather than actual productivity.

What's this about?

McKinsey recently published an article on measuring software developer productivity. While some of its points are agreeable, the core recommendations miss the mark and offer a dangerously simplistic view of productivity metrics. Here I explain, based on my experience, why McKinsey's recommendations are flawed, particularly from a DevOps and technical management perspective.

The Wrong Premises

Table 1: McKinsey's Questionable Assumptions

| Assumption | Critique |
| --- | --- |
| Sales metrics are better than software metrics | Just as measuring sales solely by revenue can be misleading, software productivity isn't just lines of code. |
| Individual productivity matters most | This negates the complexity of software engineering, which is a team effort. |
| Generic metrics can effectively measure productivity | Context matters; what works for one team might not work for another. |

DORA Metrics vs. McKinsey's Proposal

Table 2: Comparing Validity of Metrics

| Metrics | Validity | Reasoning |
| --- | --- | --- |
| DORA Metrics | High | Backed by empirical evidence, focuses on outcomes like deploy frequency, lead time, MTTR, and change failure rate. |
| McKinsey Metrics | Low | Artificially aligned, contextually shallow, and includes individual metrics that are easily gamable. |

DORA metrics - Image by Google

DORA Metrics and DevOps

DORA metrics align well with DevOps principles:

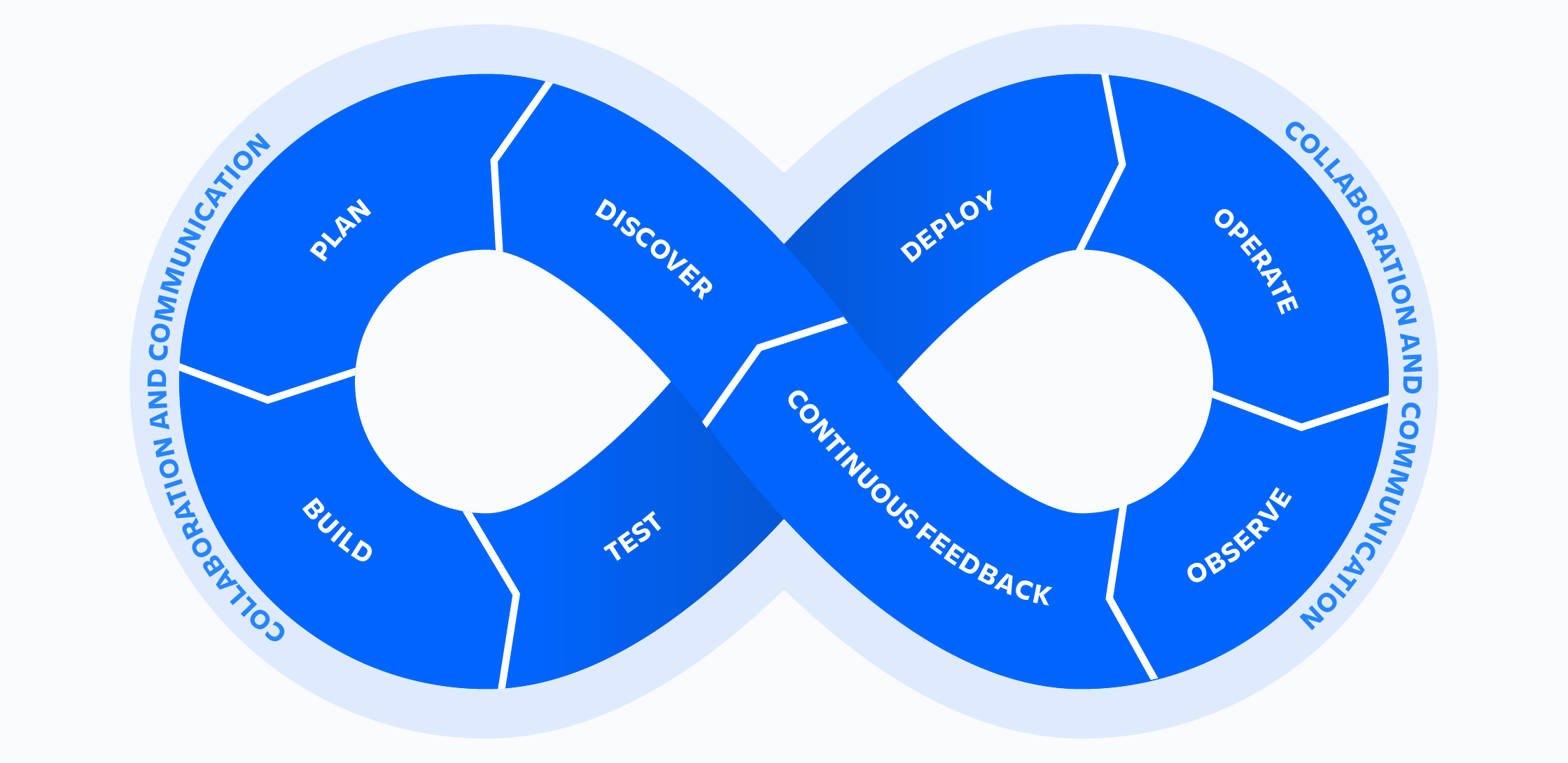

DevOps Loop - Image by Atlassian

- Fast feedback loops: Lead time and deploy frequency show how quickly a team can move a change from commit to production, and how often it does so.

- Reduced risk: MTTR (Mean Time to Recover) and change failure rate metrics correlate with system robustness.
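
To make the four metrics concrete, here is a minimal Python sketch of how a team might compute them from its own deployment log. The `Deployment` record, its field names, and the `dora_metrics` function are assumptions made for illustration; they are not part of any DORA tooling, and real pipelines will expose this data in different shapes.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production deployment (hypothetical record shape)."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it degrade production?
    restored_at: Optional[datetime] = None   # when service was restored


def dora_metrics(deployments, window_days):
    """Summarize the four DORA metrics for one team over a time window."""
    if not deployments or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")

    # Lead time: commit to production, in seconds.
    lead_times = [
        (d.deployed_at - d.committed_at).total_seconds() for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    # Time to restore: failed deployment to restored service, in seconds.
    restore_times = [
        (d.restored_at - d.deployed_at).total_seconds()
        for d in failures
        if d.restored_at is not None
    ]

    return {
        "deploys_per_day": len(deployments) / window_days,
        "median_lead_time_hours": median(lead_times) / 3600,
        "change_failure_rate": len(failures) / len(deployments),
        "mean_time_to_restore_hours": (
            sum(restore_times) / len(restore_times) / 3600
            if restore_times
            else None  # no restored failures in this window
        ),
    }
```

Note that every input is a team-level event (a deployment, a failure, a restore). Nothing in the calculation attributes work to an individual, which is the property that makes these metrics hard to game in the way individual scores are.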

The Problem with Individual Metrics

McKinsey suggests metrics like "velocity index benchmark," "contribution analysis," and "talent capability scores," among others. From a DevOps standpoint, these are counterproductive for several reasons:

- Reduced Collaboration: When people are scored on individual output, they may opt for quick, individually attributable fixes over important shared work like pipeline optimization, which has a long-term impact on the whole team's productivity.

- Learned Helplessness: Engineers become oriented around "gaming the system" rather than focusing on value-added work.

How to Truly Measure Productivity

Culture and Process Over Individuals

Trust and good organizational practices often have a more significant impact on team success than individual brilliance.

Real-World DevOps Measures

- Pipeline Efficiency: Time taken from code check-in to deployment.

- Rollback Rates: Frequency of rollbacks as an indicator of code quality.

- Incident Metrics: Time to acknowledge and resolve incidents.
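
As a rough sketch of how these three measures could be derived from data most teams already have, the Python below summarizes pipeline runs and incidents. The `PipelineRun` and `Incident` records, their fields, and both summary functions are hypothetical names chosen for this example, not the API of any CI or incident-management tool.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import mean


@dataclass
class PipelineRun:
    """One change moving through the pipeline (hypothetical record shape)."""
    checked_in_at: datetime
    deployed_at: datetime
    rolled_back: bool = False


@dataclass
class Incident:
    """One production incident (hypothetical record shape)."""
    opened_at: datetime
    acknowledged_at: datetime
    resolved_at: datetime


def _hours(start, end):
    """Elapsed time between two timestamps, in hours."""
    return (end - start).total_seconds() / 3600


def pipeline_summary(runs):
    """Pipeline efficiency and rollback rate for a non-empty list of runs."""
    return {
        # Pipeline efficiency: average check-in to deployment time.
        "mean_checkin_to_deploy_hours": mean(
            _hours(r.checked_in_at, r.deployed_at) for r in runs
        ),
        # Rollback rate: share of deployments that were rolled back.
        "rollback_rate": sum(r.rolled_back for r in runs) / len(runs),
    }


def incident_summary(incidents):
    """Average time to acknowledge and to resolve, for a non-empty list."""
    return {
        "mean_time_to_acknowledge_hours": mean(
            _hours(i.opened_at, i.acknowledged_at) for i in incidents
        ),
        "mean_time_to_resolve_hours": mean(
            _hours(i.opened_at, i.resolved_at) for i in incidents
        ),
    }
```

Averages are used here for brevity; a team with a few very slow runs or long incidents may prefer medians or percentiles so that outliers do not dominate the picture.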

Benefits of Using DORA Metrics

Decision-making Efficiency:

- Focuses on key metrics, filtering out noise.

- Aids in prioritizing impactful changes.

Value Delivery:

- Directly correlates with product quality.

- Identifies bottlenecks for targeted improvement.

Continuous Improvement:

- Serves as a performance baseline.

- Highlights actionable areas for agile and lean practices.

Conclusion

I believe that McKinsey's proposals not only fail to capture what makes a software engineering team productive but can also be damaging if misapplied. The research behind the book Accelerate and the evolution that DevOps has brought us both tell us that productivity is less about numerical metrics and more about cultivating a culture where smart practices, continual learning, and team collaboration are the yardsticks of success.